By Ian Morris

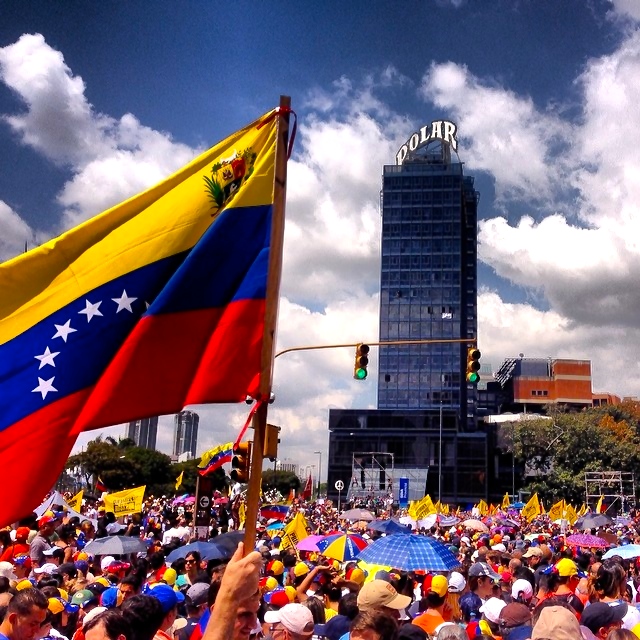

“No-one pretends that democracy is perfect or all-wise,” Winston Churchill observed in 1947. “Indeed, it has been said that democracy is the worst form of Government except all those other forms that have been tried from time to time.” And most people tend to agree with Churchill’s sentiment that nothing beats the wisdom of the crowd. In 2007, EU polls found that around the world, regardless of country, continent, age, gender or religion, about 80 percent of respondents believed democracy was the best way to run a society.

And yet, very few people felt this way until very recently. Throughout most of recorded history, democracy has consistently been equated with mob rule. Even in November 1787, a mere two months after playing a leading role in drafting the U.S. Constitution, James Madison took it for granted that “democracies have ever been spectacles of turbulence and contention.”

To me, this raises one of the biggest but least asked questions in global politics: Should we assume that we are cleverer than our predecessors and that we have finally figured out the best way of organizing communities, regardless of their circumstances? Or should we assume that because democracy has a history, it — like everything else in history — will someday pass away?

The Technological Challenge to Democracy

I have been thinking about this question a lot since this spring, when I attended a dinner hosted by Stanford University’s cybersecurity committee on how cybersocial networks might affect democracy. (Serving on committees is generally the worst part of an academic’s life, but some can be rather rewarding by exposing their members to a range of new ideas.) My fellow diners were mostly members of Stanford’s political science and computer science departments, and much of the conversation centered on the details of designing better voting machines or networking citizens for online town hall meetings. But some of the talk rose above the minutiae and into grander, more abstract speculation on what supercomputers and our growing ability to crunch large data sets might mean for the voice of the people.

The past decade has seen huge advances in algorithms for aggregating and identifying preferences. We have all become familiar with one of the more obvious consequences of this trend: Personalized ads, tailored to our individual browsing histories, pop up unbidden on our computer screens on a regular basis. But research has moved beyond the effects of these algorithms on decisions about consumption and on to decisions about justice and politics. When tested against the 68,000 individual votes that have been cast in the U.S. Supreme Court since 1953, a computer model known as {Marshall}+ correctly predicted the outcomes of 71 percent of cases. Meanwhile, an algorithm designed at the University of Warwick predicted the outcome of the 2015 British election with 91 percent accuracy by analyzing political tweets.

Admittedly, there is still a long way to go. {Marshall}+ is good, but it cannot compete with Jacob Berlove, a 30-year-old resident of Queens, N.Y., who has no formal legal or statistical training but is able to predict the Supreme Court’s votes with 80 percent accuracy. And while the Twitter-analyzing algorithm got many things right about the British vote, it was utterly wrong on the one thing that really mattered: the relative tallies of the Labour and Conservative parties.

But as technologists like to say, we ain’t seen nothing yet. Algorithms are still in their infancy, and I would guess that by the time self-driving cars become a common sight on our roads (2020, according to Google; 2025, according to most auto manufacturers), our computers will have a better idea of what we want our politicians to do than we do ourselves. And should that come to pass, we might expect two additional predictions to play out as well.

The first seems uncontroversial: Even fewer people will bother to vote. In the United States’ 2012 presidential election, only 58 percent of citizens took the time to exercise their right to vote. In the 2014 midterm elections, that figure dropped to 36 percent. Other Organization for Economic Cooperation and Development countries aren’t much better: On average, only seven of every 10 voters participate in member states’ elections. In Switzerland’s 2011 legislative elections, only four of every 10 voters participated. How much further should we expect participation to fall when technocrats confirm citizens’ suspicions that their votes don’t really matter?

The second prediction is even more ominous: When our computers know best what we want from politicians, it won’t be a big leap to assume that they will also know better than the politicians what needs to be done. And in those circumstances, flesh-and-blood politicians with all their imperfections may start to seem as dispensable as the elections that we have thus far used to choose them.

Omniscient computers that run the world are a staple of science fiction, but they are not what we are talking about here. As Churchill might have said, political machines do not need to be perfect or all-wise, just less error-prone, ignorant or venal than democracy or any of those other forms of government that have been tried from time to time.

Science fiction stories also often feature people rising up in arms against machines, violently overthrowing their too-clever-by-half computers before they take over mankind’s future. But I suspect that this scenario has no more connection with reality than Isaac Asimov’s Foundation trilogy, one of my favorite science fiction renditions of the all-knowing computer that controls human society. After all, we already casually entrust our lives to algorithms. There was a time when people worried about computers controlling traffic lights, but because they did such a good job of it, that fear eventually subsided. Now, some worry about driverless cars in much the same way, but these fears will also fade if the vehicles cut the number of fatalities on the road by 90 percent by 2050, as McKinsey consultants have predicted. In doing so, they would save 30,000 lives and $190 billion in health care costs each year in the United States alone.

My guess is that humanity will almost certainly never face a momentous, once-and-for-all choice between following our instincts and following algorithms. Rather, we will be salami-sliced into a post-democratic order as machines prove themselves to be more competent than us in one area of life after another.

How Democracy Rises and Falls

One reason it is so difficult to forecast where this trend might take us is that there is a shortage of good historical comparisons that would allow us to see how other people have coped with similar issues. There is, in fact, really only one: ancient Greece.

The use of historical analogies is a problem in its own right, because one of the most common blunders in policymaking is drawing simplistic, 1:1 correlations between a current event and a single case in the past. Noting similarities between contemporary problems and some earlier episode (say, between Russian President Vladimir Putin’s moves in Ukraine and Hitler’s actions in the Sudetenland), strategists regularly — and often erroneously — conclude that because action A led to result B in the past, it will do so again in the present. The flaw in that sort of thinking is that for every similarity that exists between 1938 and 2015, there are also dozens of differences. The only way to know if the lessons we are drawing from history are right is to look at a large number of cases, identifying broad patterns that reveal not only general trends but also the kinds of forces that might undermine them, causing a specific case to turn out much differently than we might expect.

But because democracy has been an extremely rare occurrence, examples of societies abandoning democracy are also extremely rare. Therefore, we have little alternative to comparing our own world with ancient Greece. The analogy, though only able to provide limited insight, is still rather illuminating.

Ancient Greece differed from the modern world in many ways, the most obvious of which were its low levels of technology and its small scale. (Even a relatively huge Greek city-state like fifth-century B.C. Athens had only 350,000 residents.) Not surprisingly, its democracy differed too: Rights were restricted to free adult males, who made up about 20 percent of the population, and democratic values regularly coexisted with slavery. Greek democracy thus had more in common with 1850s Virginia than with today’s United States.

And yet, ancient and modern democracies have all shared the same basic logic. Since around 3000 B.C. — as far back as our evidence dates — nearly all complex, hierarchical societies have rested on the belief that a few people have privileged access to a supernatural sphere. From Egypt’s first pharaoh to France’s Louis XIV, we find the same claim repeated and largely accepted: Because we kings and our priests know what God or the gods want, it makes perfect sense for the rest of you to do what we say. But for reasons that continue to be debated, many Greeks began rejecting this idea between about 750 B.C. and 500 B.C. Around A.D. 1500-1750, many northwestern Europeans (and their colonists in North America) went down a similar path. Both sets of revolutionaries then had to confront the same pair of questions: If no one really knows what God or the gods want, how can we tell what to do and how to run a good society?

Both groups gradually drifted toward similar answers. Even though no one knows so much that we can leave all the big decisions to him alone, they said, each of us (or us men) knows a little bit. Pooling our wisdom won’t necessarily produce the right or best answers, but it should nevertheless produce the least bad answers possible under the circumstances. Bit by bit, Greek city-states in the sixth century B.C. transferred power from the aristocratic councils to broader assemblies of male citizens. By the fifth century B.C., they had begun calling their new constitution dêmokratia, which literally means “people power.” Similarly, between the late 18th and 20th centuries, European states and their overseas colonists led the way in gradually shifting power from royals and nobles to representatives of the people, eventually going beyond the Greeks by outlawing slavery and enfranchising women.

In both cases, democracy rested on the twin pillars of efficiency and justice. On the one hand, the unavailability of god-given rulers meant that listening to the voice of the people was the most efficient way to figure out what to do; on the other, if everyone knows something but no one knows everything, the only just system is one that gives everyone equal rights.

In Greece, this consensus began breaking down around 400 B.C. under the strain of the Peloponnesian War between Athens and Sparta. A few military leaders, including the Spartan Adm. Lysander, accumulated so much power that they seemed to surpass ordinary mortals, and philosophers like Plato began to question the wisdom of the crowd. What if, Plato asked, democracy pools not our collective insights but our collective ignorance, leading not to the least bad solutions but to the least good? What if the only way to find truth is to rely on the thinking of a tiny number of truly exceptional beings?

The answer came in the persons of Philip II of Macedon and his son, Alexander the Great. The former conquered the whole of mainland Greece between 359 B.C. and 338 B.C., while the latter did the same to the entire Persian Empire by 324 B.C. Both men aggressively pushed the idea that they were superhuman. In one famous story, set around the border between modern-day India and Pakistan, Alexander summoned a group of Hindu sages to test their wisdom. “How can a man become god?” he asked one of them. The sage responded, “By doing something a man cannot do.” It is hard not to picture Alexander then asking himself who, to his knowledge, had done something a man cannot do. The answer must have been unavoidable: “Me. I, Alexander, just conquered the entire Persian Empire in 10 years. No mere mortal could do that. I must be divine.”

Emboldened, Alexander proclaimed at the Olympic Festival in 324 B.C. that all Greeks should worship him as a god. Initially, the people responded with bemusement; Athenian politician Demosthenes said, “All right, make him the son of Zeus — and of Poseidon too, if that’s what he wants.” But the sheer scale of Alexander’s achievements had genuinely shattered the assumptions of the previous two centuries. A new elite was concentrating such wealth and power into its hands that its members really did seem superhuman. When Demetrius the Besieger, the son of one of Alexander’s generals, seized Athens in 307 B.C., Athenians rushed to offer him divine honors as the “Savior God,” and over the next 100 years, worshipping kings became commonplace. Plenty of city-states — more than ever before, in fact — liked to call themselves dêmokratiai, but small elite circles with good connections to the kings’ courts took control of all important decisions.

Democracy swept Greece after 500 B.C. because it solved the specific problem of how to know what to do and run a good society if no one had privileged access to divine wisdom. It unraveled around 300 B.C. because the achievements of Alexander and his successors seemed to show that some people did in fact have such access. Democracy’s claim to be the most efficient form of government looked like nonsense once large numbers of Greeks began to believe that their leaders were the sons of gods; its claim that justice meant giving every man equal rights looked equally ludicrous when some men, thought to be demigods, so obviously seemed to deserve more rights than others.

Democracy: A Solution to Particular Problems

While it is highly unlikely that the 21st-century world will follow the same path as Greece in the fourth and third centuries B.C., I will close by suggesting that there nevertheless might be an important lesson in this ancient analogy. Democracy is not a timeless, perfect political order; it is a solution to particular problems. When these problems disappear, as they eventually did in ancient Greece, democracy’s claims to superior efficiency and justice can vanish with them.

Democracy has a solid foundation to its claims of being the most efficient and just solution to the particular challenges of the 19th and 20th centuries. But now, we are making machines that have much stronger claims to godlike omniscience than either Philip or Alexander had. Economist Thomas Piketty already worries about the growing gap between rich and poor that “automatically generates arbitrary and unsustainable inequalities that radically undermine the meritocratic values on which democratic societies are based,” but in terms of significance, these pale in comparison to the consequences of creating new forms of intelligence that dwarf anything humanity is capable of. Put simply, the end of democracy may be one of the least shocking changes that the 21st century will bring.